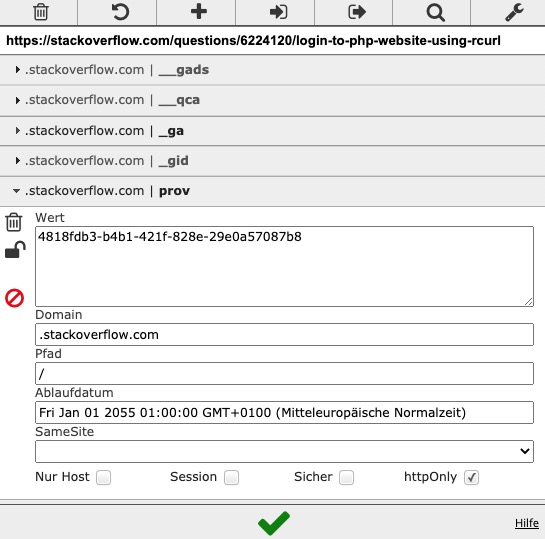

While there are quite a few Stack Overflow examples of how to handle a login from R, here are the necessary steps for the case where you have to log in manually and start from a browser cookie. First install the “EditThisCookie” extension in Chrome and export the cookie to the clipboard.

Then copy and paste the clipboard content into the R editor, between the single quotes of the c.json assignment:

install.packages(c("rvest","stringr","rjson","RCurl"))

library(rjson)

library(RCurl)

c.json <- c('

[

{

"domain": "www.domain.de",

"hostOnly": true,

"httpOnly": true,

"name": "MX_SID",

"path": "/",

"sameSite": "unspecified",

"secure": true,

"session": true,

"storeId": "0",

"value": "4e1799f010efa4387874399253695521",

"id": 1

}

]

')

result <- fromJSON(c.json)

fn <- path.expand("~/cookies.txt")  # libcurl does not expand "~" itself

unlink(fn)

for (i in result) {

e <- if (is.null(i$expirationDate)) 2147483647 else floor(i$expirationDate)  # session cookies have no expirationDate

cat( paste0(i$domain,"\t",

"FALSE","\t",

i$path,"\t",

"TRUE","\t",

format(e, scientific=FALSE),"\t",

i$name,"\t",

i$value,"\n" ), file=fn, append=TRUE )

}

curl = getCurlHandle (cookiefile = fn,

cookiejar = fn,

useragent = "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6",

verbose = TRUE)

content <- getURL("https://www.domain.de/whatever",curl = curl)

rm(curl)
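
The conversion done in the loop above can also be wrapped in a small function, which makes it easier to check the cookie file before handing it to RCurl. The following is only a sketch in base R: the name cookies_to_netscape and its default_expiry argument are hypothetical and not part of the original script, and it assumes the Netscape cookie-file layout of seven tab-separated fields (domain, subdomain flag, path, secure flag, expiry, name, value). Unlike the loop, it derives the two flags from the cookie itself instead of hard-coding them.

```r
# Hypothetical helper (an assumption, not from the original script):
# turn cookies parsed from an EditThisCookie export into Netscape cookie-file lines.
cookies_to_netscape <- function(cookies, default_expiry = 2147483647) {
  vapply(cookies, function(ck) {
    # Session cookies have no expirationDate; fall back to a far-future timestamp.
    expiry <- if (is.null(ck$expirationDate)) default_expiry else floor(ck$expirationDate)
    # A leading dot marks a domain cookie, which is also sent to subdomains.
    subdomains <- if (startsWith(ck$domain, ".")) "TRUE" else "FALSE"
    secure <- if (isTRUE(ck$secure)) "TRUE" else "FALSE"
    paste(ck$domain, subdomains, ck$path, secure,
          format(expiry, scientific = FALSE), ck$name, ck$value, sep = "\t")
  }, character(1))
}

# Example input mirroring the exported cookie shown above.
example <- list(list(domain = "www.domain.de", path = "/", secure = TRUE,
                     name = "MX_SID", value = "4e1799f010efa4387874399253695521"))

cookie_lines <- cookies_to_netscape(example)

# Write to a temporary file so that an existing cookies.txt is left untouched.
tmp <- tempfile(fileext = ".txt")
writeLines(c("# Netscape HTTP Cookie File", cookie_lines), tmp)

# Read the file back to confirm that every cookie has seven tab-separated fields.
check <- read.delim(tmp, header = FALSE, comment.char = "#",
                    colClasses = "character", quote = "")
```

If check has one row per cookie and seven columns, the file should be readable through the cookiefile option used above. For the real export, pass result instead of example.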