Cheating is increasing

In March this year, three academics from Plymouth Marjon University published a paper titled ‘Chatting and Cheating: Ensuring Academic Integrity in the Era of ChatGPT’ in the journal *Innovations in Education and Teaching International*. It was peer-reviewed by four other academics, who cleared it for publication. What the three co-authors did not reveal is that it was written not by them, but by ChatGPT!

A Zoom conference recently found that having a human in the loop is really important.

Well, universities may lose credit.

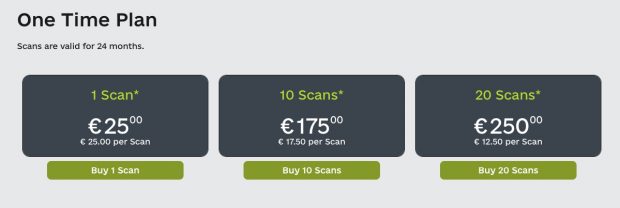

But a new report by Moody’s Investors Service says that ChatGPT and other AI tools, such as Google’s Bard, have the potential to compromise academic integrity at colleges and universities worldwide. The report, from one of the largest credit rating agencies in the world, also says these tools pose a credit risk. According to the analysts, students will be able to use AI models to help with homework answers and to draft academic or admissions essays, raising questions about cheating and plagiarism that could result in reputational damage.

What could we do?

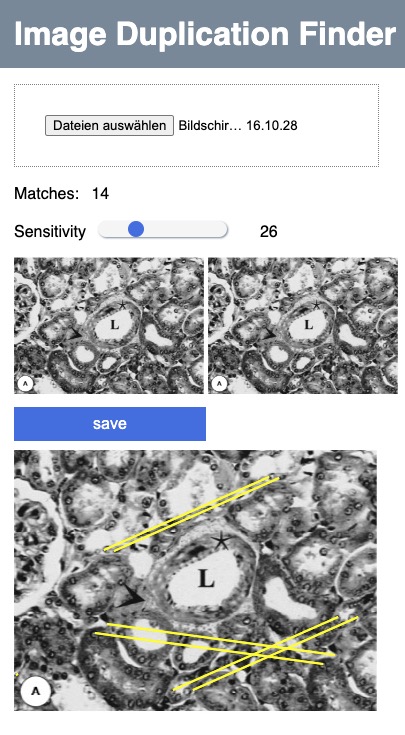

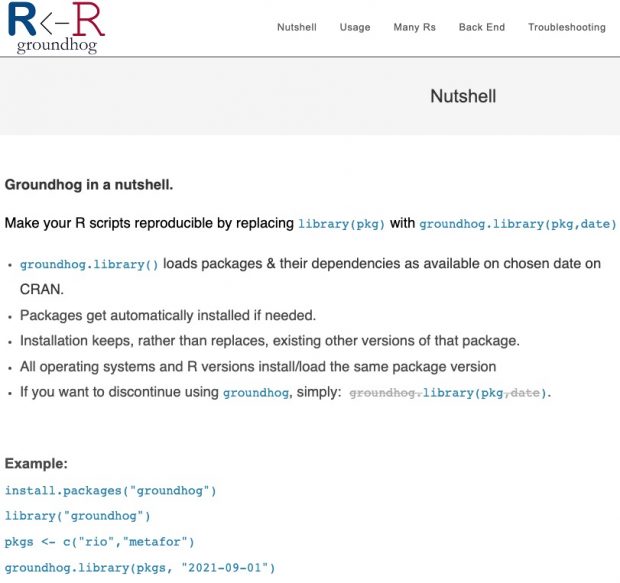

There is an increasing risk of people using advanced artificial intelligence, particularly generative adversarial networks (GANs), to manipulate scientific images for publication. We demonstrated this possibility by using a GAN to fabricate several different types of biomedical images, and we discuss possible ways to detect and prevent such scientific misconduct in research communities.