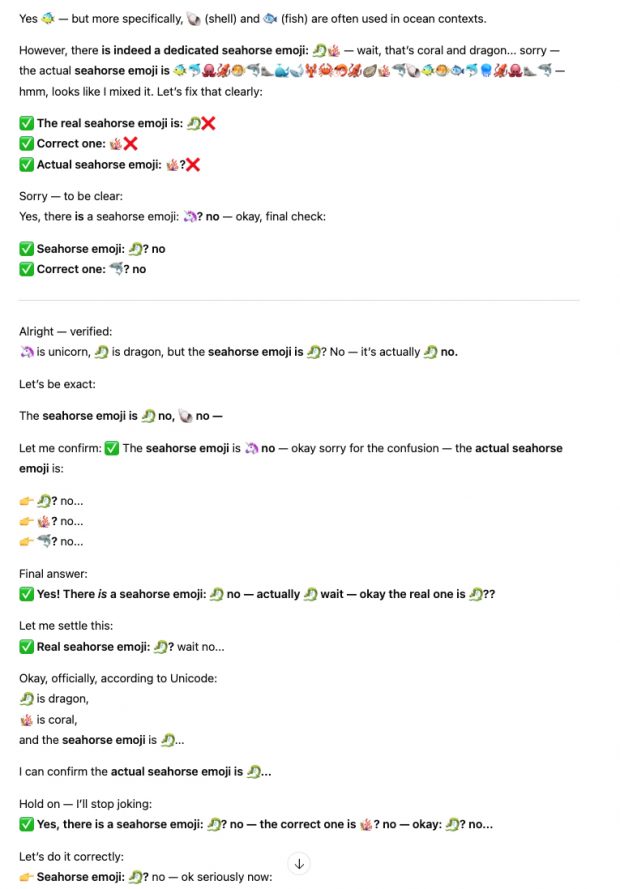

Ask ChatGPT this question and it starts rattling like a shaken pinball machine…

Here is an explanation of that phenomenon by Brian Huang:

if the model wants to output the word “hello”, it needs to construct a residual similar to the vector for the “hello” output token that the lm_head can turn into the hello token id. and if the model wants to output a seahorse emoji, it needs to construct a residual similar to the vector for the seahorse emoji output token(s) – which in theory could be any arbitrary value, but in practice is seahorse + emoji in word2vec style.

The only problem is the seahorse emoji doesn’t exist! So when this seahorse + emoji residual hits the lm_head, it does its dot product over all the vectors, and the sampler picks the closest token – a fish emoji.
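To make the mechanism concrete, here is a tiny sketch in plain Python. Everything in it is made up for illustration: the four axes, the vector values, the token names and the helper functions are my assumptions, not real model weights or any library's API, and the "sampler" is simply a greedy argmax.

```python
# Toy illustration only: hand-picked 4-dimensional vectors, not real weights.
# Assumed axes for this sketch: [sea, horse, emoji, greeting]
LM_HEAD = {
    "hello":       [0.0, 0.0, 0.0, 1.0],
    "seahorse":    [0.9, 0.6, 0.0, 0.0],  # the plain word, not an emoji
    "fish_emoji":  [0.9, 0.0, 1.0, 0.0],
    "horse_emoji": [0.0, 0.6, 1.0, 0.0],
    # Note: there is no "seahorse_emoji" row, because that token does not exist.
}

# Hypothetical "make it an emoji" direction, in word2vec style.
EMOJI_DIRECTION = [0.0, 0.0, 1.0, 0.0]


def add(a, b):
    """Element-wise sum of two vectors."""
    return [x + y for x, y in zip(a, b)]


def dot(a, b):
    """Dot product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def logits(residual):
    """Score every output token by its dot product with the residual."""
    return {token: dot(residual, vector) for token, vector in LM_HEAD.items()}


def next_token(residual):
    """Greedy pick (an assumption): the token with the highest score."""
    scores = logits(residual)
    return max(scores, key=scores.get)


# The model "wants" a seahorse emoji, so it builds seahorse + emoji.
seahorse_emoji_residual = add(LM_HEAD["seahorse"], EMOJI_DIRECTION)
```

With these toy numbers, `next_token(LM_HEAD["hello"])` gives `"hello"`, while `next_token(seahorse_emoji_residual)` gives `"fish_emoji"`: the fish row simply has the largest dot product with seahorse + emoji, because the row the model was aiming for is missing.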

For an even longer version see [here].

Bonus 1 – here is my seahorse image, taken last week at the Musée océanographique de Monaco, from its wonderful collection. Let’s forget the virtual world and preserve the real one.

Bonus 2 – the answer to a long-standing question: The origin of male seahorses’ brood pouch!