I confess that I worked together with the founder of ImageTwin some years ago, even encouraging him to found a company. I would even have been interested in further collaboration, but unfortunately the company has cut all ties.

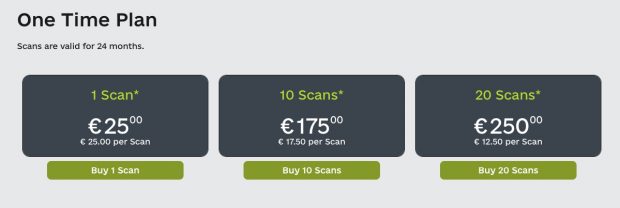

COI declared, but should we really now pay 25€ to test a single PDF?

My proposal in 2020 was to build an academic community around ImageTwin’s keypoint matching approach. AI analysis and an image depository would have been nice additions, along with more comprehensive reports than just boxes drawn around duplicated image areas.

A new research paper by new ImageTwin collaborators now finds:

> Duplicated images in research articles erode integrity and credibility of biomedical science. Forensic software is necessary to detect figures with inappropriately duplicated images. This analysis reveals a significant issue of inappropriate image duplication in our field.

Unfortunately, the authors of this paper lack a basic understanding of integrity nomenclature, flagging only images that are expected to look similar. Even worse, they miss duplications, as ImageTwin is notoriously bad with Western blots. Sadly, this paper erodes the credibility of forensic image analysis. Is ImageTwin now running out of control, just like Proofig?

Oct 4, 2023

The story continues. Instead of working on a well-defined data set and determining the sensitivity, specificity, etc. of the ImageTwin approach, a preprint by Sholto David (bioRxiv, Scholar) reports that:

> Toxicology Reports published 715 papers containing relevant images, and 115 of these papers contained inappropriate duplications (16%). Screening papers with the use of ImageTwin.ai increased the number of inappropriate duplications detected, with 41 of the 115 being missed during the manual screen and subsequently detected with the aid of the software.

It is a pseudoscientific study, as nobody knows the true number of image duplications. Nor can we verify what ImageTwin does, as ImageTwin is now behind a paywall. The news report by Anil Oza, “AI beats human sleuth at finding problematic images in research papers”, makes it even worse. The news report is simply wrong with “working at two to three times David’s speed” (it is 20 times faster but gives numerous false positives) and with “Patrick Starke, one of its developers” (Starke is a salesperson, not a developer).
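To make the point about the missing ground truth concrete, here is a small Python sketch that uses only the three figures from the quoted abstract. The variable and function names are my own, and I am assuming that all 115 flagged papers are confirmed duplications. It shows what can be derived from the preprint (an observed rate and the share found manually) and why sensitivity cannot be derived: that would require the number of duplications that both the manual screen and the software missed, which nobody has.

```python
# Figures quoted from the preprint abstract.
papers_with_images = 715   # papers containing relevant images
flagged_papers = 115       # papers with duplications (manual + software-aided)
missed_by_manual = 41      # of the 115, found only with the software's help

# What the manual screen found on its own.
found_by_manual = flagged_papers - missed_by_manual


def share(part, whole):
    """Return part/whole as a percentage."""
    return 100.0 * part / whole


# Observed rate: a lower bound, not the true prevalence.
observed_rate = share(flagged_papers, papers_with_images)

# Share of the *known* duplications that the manual screen caught.
manual_share_of_known = share(found_by_manual, flagged_papers)


def sensitivity(true_positives, false_negatives):
    """Sensitivity = TP / (TP + FN).

    false_negatives are duplications missed by every method used;
    pass None when that count is unknown, as it is here.
    """
    if false_negatives is None:
        return None  # cannot be computed without ground truth
    return true_positives / (true_positives + false_negatives)


print(f"observed rate: {observed_rate:.1f}%")
print(f"manual share of known duplications: {manual_share_of_known:.1f}%")
print(f"sensitivity: {sensitivity(flagged_papers, None)}")
```

The last line prints `None` on purpose. Any sensitivity figure would depend entirely on a guess for the number of undetected duplications, which is exactly why a well-defined reference data set is needed.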

So in the end, the Oza news report is just a PR stunt, as confirmed on Twitter the next day.

Unfortunately, ImageTwin has now fallen back into the same league as Acuna et al. Not unexpectedly, Science magazine has chosen Proofig for image testing.