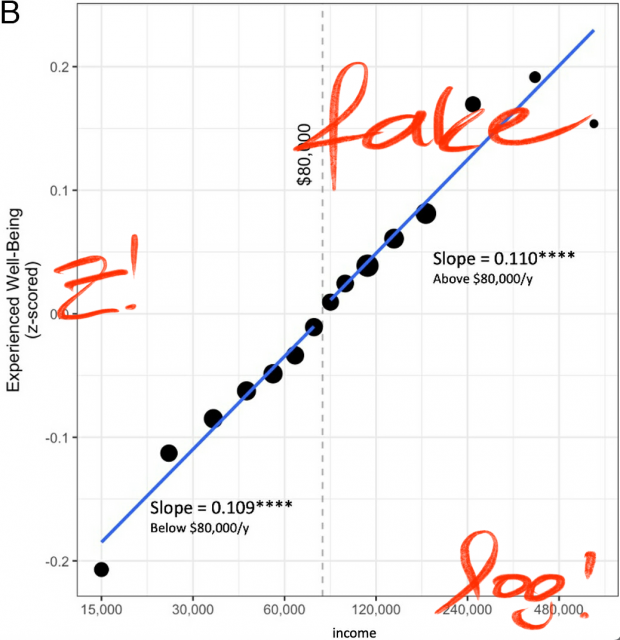

ephorie.de has a convincing re-analysis that Daniel Kahneman is wrong, who purportedly demonstrated that emotional wellbeing increases with income but plateaus at $75,000…

.

.

ephorie.de has a convincing re-analysis that Daniel Kahneman is wrong, who purportedly demonstrated that emotional wellbeing increases with income but plateaus at $75,000…

.

.

The title is clickbait as nobody really knows what is really relevant in science although some people still think that a few failed postdocs at NSC (Nature, Science, Cell) are the ultimate judges here.

Google also did not know in the 1990ies when they invented the pagerank with the now famous words “The prototype with a full text and hyperlink database of at least 24 million pages is available at http://google.stanford.edu”.

Everybody since then believed in the authoritative power of links but according to Roger Montti they are no more relevant as at a recent conference in Bulgaria Google’s Gary Illyes confirmed that links have lost their importance. And maybe even Google at all?

Create a PHP script that can read a CSV in the form start_date, end_date, event and output as ICS file

function convertDate($date)

{

$dateTime = DateTime::createFromFormat('m/d/Y', $date);

if ($dateTime === false) {

return false; // Return false if date parsing fails

}

return $dateTime->format('Ymd');

}

// Function to escape special characters in text

function escapeText($text)

{

return str_replace(["\n", "\r", ",", ";"], ['\n', '\r', '\,', '\;'], $text);

}

// Read CSV file

$csvFile = 'uci.csv'; // Replace with your CSV file name

$icsFile = 'uci.ics'; // Output ICS file name

$handle = fopen($csvFile, 'r');

if ($handle !== false) {

// Open ICS file for writing

$icsHandle = fopen($icsFile, 'w');

// Write ICS header

fwrite($icsHandle, "BEGIN:VCALENDAR\r\n");

fwrite($icsHandle, "VERSION:2.0\r\n");

fwrite($icsHandle, "PRODID:-//Your Company//NONSGML Event Calendar//EN\r\n");

// Read CSV line by line

while (($data = fgetcsv($handle, 1000, ',')) !== false) {

$startDate = convertDate($data[0]);

$endDate = convertDate($data[1]);

print_r($data) . PHP_EOL;

echo $startDate;

if ($startDate === false || $endDate === false) {

continue;

}

$event = escapeText($data[2]);

// Write event to ICS file

fwrite($icsHandle, "BEGIN:VEVENT\r\n");

fwrite($icsHandle, "UID:" . uniqid() . "\r\n"); // Unique identifier

fwrite($icsHandle, "DTSTART;VALUE=DATE:" . $startDate . "\r\n");

fwrite($icsHandle, "DTEND;VALUE=DATE:" . $endDate . "\r\n");

fwrite($icsHandle, "SUMMARY:" . $event . "\r\n");

fwrite($icsHandle, "DESCRIPTION:" . $event . "\r\n");

fwrite($icsHandle, "END:VEVENT\r\n");

}

// Write ICS footer

fwrite($icsHandle, "END:VCALENDAR\r\n");

// Close files

fclose($icsHandle);

fclose($handle);

echo "ICS file generated successfully.";

} else {

echo "Error: Unable to open CSV file.";

}

Source data are from UCI and output is here from where it can be added as a calendar. BTW created also my first “hello world” Swift/iPhone app using this source although this took a bit more time…

attn: this is the chatGPT solution

In LaTeX, you can use the biblatex package along with a bibliography management tool like BibTeX … to manage your references. To cite references generated by Bookends, you would typically export your references from Bookends to a BibTeX (.bib) file and then include that file in your LaTeX document.

Here’s a general outline of how you can cite Bookends references in LaTeX using BibTeX:

1. Export your references from Bookends to a BibTeX (.bib) file.

2. Include the BibTeX file in your LaTeX document using \bibliography{your_bibliography_file}.

3. Cite the references in your document using \cite{} or \autocite{} commands, passing the citation key corresponding to each reference in your BibTeX file.

\documentclass{article}

\usepackage[backend=biber]{biblatex}

% Include your bibliography file

\bibliography{your_bibliography_file}

\begin{document}

Some text \autocite{citation_key}.

% Your content here

\printbibliography

\end{document}

Replace your_bibliography_file with the name of your BibTeX file (without the .bib extension), and replace citation_key with the citation key of the reference you want to cite.

After compiling your LaTeX document with the appropriate compiler (usually something like pdflatex followed by biber and then pdflatex again), LaTeX will automatically format the citations and generate the bibliography according to the style specified in your document.

Make sure to choose a citation style compatible with your field or publication requirements. You can specify the citation style in the \usepackage[style=…]{biblatex} command. Popular styles include apa, ieee, chicago, etc.

Mayn journals requiring consecutive line numbering which is not a problem with MS Word, Open Office or LaTeX but with Pages.

Maybe it is possible to add a new background to PDF? Acrobat can do it (which I would not recommend), there is some crazy script out there (that did not work as well as ). My initial solution

pandoc -s input.rtfd -o output.tex --template=header_template.tex | pdflatex -output-directory=output_directory

with the header_template.tex containing

---

header-includes:

- \usepackage[left]{lineno}

- \linenumbers

- \modulolinenumbers[5]

---

did not work as pandoc cannot read the rather complex rtfd format produced by Pages. Also the next try with rtf2latex2e looked terrible, so I went back to RTF export in Libre Office where I corrected the few Math formulas that were not recognized correctly.

Another option would have been Google Docs – they introduced line numbering recently which is probably the fastest and easiest way to do that.

Whenver it comes to more than one formula, it would be even better to move from Pages to Overleaf (online) or Texifier (local).

I have rewritten my image website last week, collapsing 25 php scripts into 1 while combining it with another 5 javascript files. The interesting point is that most of the server side layout moved now to the client side.

The website was running basically for 20 years while I felt it is too time consuming now to update it one more time always running at risk of some security flaws.

There is now now a true responsive layout, image upload is now done by a web form and no more by FTP. I am also not storing any more different image sizes while all the processing including watermarking is now done on the fly. This required however some more sophisticated rules for site access.

Writing the htaccess rules was the most complicated. thing where even chatGPT failed. Only the rules tester helped me out…

A MUST READ by Tim Berners Lee at Medium

Three and a half decades ago, when I invented the web, its trajectory was impossible to imagine … It was to be a tool to empower humanity.The first decade of the web fulfilled that promise — the web was decentralised with a long-tail of content and options, it created small, more localised communities, provided individual empowerment and fostered huge value. Yet in the past decade, instead of embodying these values, the web has instead played a part in eroding them…

The Semrush blog has a nice summary

By analyzing two main characteristics of the text: perplexity and burstiness. In other words, how predictable or unpredictable it sounds to the reader, as well as how varied or uniform the sentences are.

a statistical measure of how confidently a language model predicts a text sample. In other words, it quantifies how “surprised” the model is when it sees new data. The lower the perplexity, the better the model predicts the text.

is the intermittent increases and decreases in activity or frequency of an event. One of measures of burstiness is the Fano factor —a ratio between the variance and mean of counts. In natural language processing, burstiness has a slightly more specific definition… A word is more likely to occur again in a document if it has already appeared in the document. Importantly, the burstiness of a word and its semantic content are positively correlated; words that are more informative are also more bursty.

Or lets call it entropy? So we now have some criteria

Well, there appear now also AI prologue sentences in scientific literature for example like “Certainly! Here is…”

part I

(sound is poor)

part II

part III

part IV

TBC

At stackexchange there is a super interesting discussion on parallelized computer code and DNA transcription (which is different to the DNA-based molecular programming literature…)

IF : Transcriptional activator; when present a gene will be transcribed. In general there is no termination of events unless the signal is gone; the program ends only with the death of the cell. So the IF statement is always a part of a loop.

WHILE : Transcriptional repressor; gene will be transcribed until repressor is not present.

FUNCTION: There are no equivalents of function calls. All events happen is the same space and there is always a likelihood of interference. One can argue that organelles can act as a compartment that may have a function like properties but they are highly complex and are not just some kind of input-output devices.

GOTO is always dependent on a condition. This can happen in case of certain network connections such as feedforward loops and branched pathways. For example if there is a signalling pathway like this: A → B → C and there is another connection D → C then if somehow D is activated it will directly affect C, making A and B dispensable.

Of course these are completely different concepts. I fully agree with the further stackexchange discussion that

it is the underlying logic that is important and not the statement construct itself and these examples should not be taken as absolute analogies. It is also to be noted that DNA is just a set of instructions and not really a fully functional entity … However, even being just a code it is comparable to a HLL [high level language] code that has to be compiled to execute its functions. See this post too.

Please forget everything you read from Francis Collins about this.

There is a new Science paper that shows

A central promise of artificial intelligence (AI) in healthcare is that large datasets can be mined to predict and identify the best course of care for future patients. … Chekroud et al. showed that machine learning models routinely achieve perfect performance in one dataset even when that dataset is a large international multisite clinical trial … However, when that exact model was tested in truly independent clinical trials, performance fell to chance levels.

This study predicted antipsychotic medication effects for schizophrenia – admittedly not a trivial task due to high individual variability (as there are no extensive pharmacogenetics studies behind). But why did it completely fail? The authors highlight two major points in the introduction and detail three in the discussion

So are we left now without any clue?

I remember another example of Gigerenzer in “Click” showing misclassification of chest X rays due to different devices (mobile or stationary) which associates with more or less serious cases (page 128 refers to Zech et al.). So we need to know the relevant co-factors first.

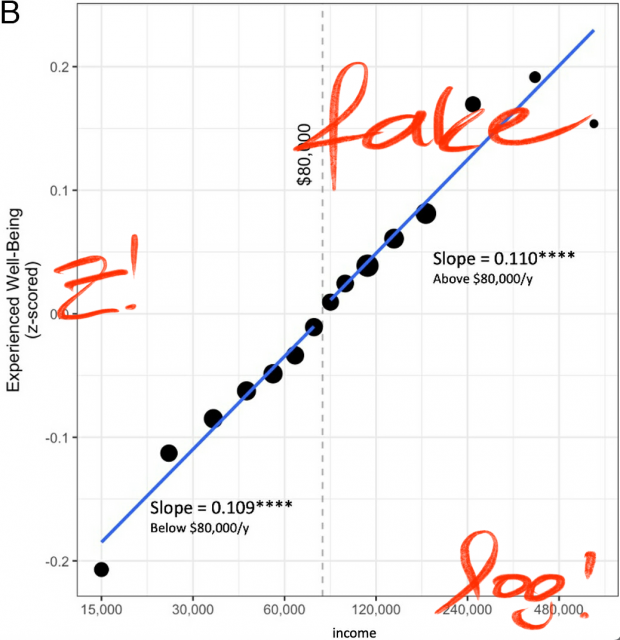

There is even a first understanding of the black box data shuffling in the neuronal net. Using LRP (Layer-wise Relevance Propagation) the recognition by weighting the characteristics of the input data can already be visualized as a heatmap.