I am currently working on a lecture about how I make up my mind whenever I approach a new scientific field. Of course, we get our first orientation from proven experts, journals and textbooks; then we collect additional material at random, just to increase confidence.

But what happens if there is some deepfake science? A new Forbes article highlights deepfakes and how they are going to wreak havoc on society, warning that “we are not prepared”:

While impressive, today’s deepfake technology is still not quite to parity with authentic video footage—by looking closely, it is typically possible to tell that a video is a deepfake. But the technology is improving at a breathtaking pace. Experts predict that deepfakes will be indistinguishable from real images before long. “In January 2019, deep fakes were buggy and flickery,” said Hany Farid, a UC Berkeley professor and deepfake expert. “Nine months later, I’ve never seen anything like how fast they’re going. This is the tip of the iceberg.”

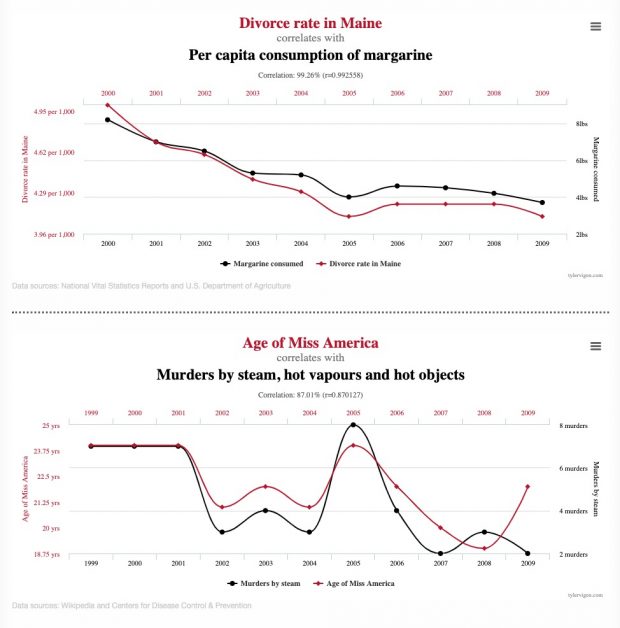

This means that empirical science can be manipulated as well, which will be even harder to detect.

CC-BY-NC Science Surf

accessed 18.02.2026