1. What is this all about?

Since the start of the Corona pandemic I have been streaming lectures and church concerts. A MacBook and an old Chromebook, some old iPhones and Nikon DSLR cameras were connected by HDMI cables to a Blackmagic ATEM Mini Pro. This worked well, although HDMI has many shortcomings: it is basically a protocol for connecting screens to a computer, not for linking devices across a large nave.

- cables are expensive and there are several connector types (A=Standard, B=Dual, C=Mini, D=Micro, E=Automotive), and the cable with the right length and connector always seems to be missing

- it never worked for me beyond 15-20 m, even with an amplifier inserted

- the signal was never 100% stable; sometimes it dropped out in the middle of a performance

- HDMI is unidirectional, so there is no tally light signal back to the camera

- there is no PTZ control for camera movement

2. What are the options?

Wi-Fi transmission would be convenient but is probably not the first choice for video in a crowded space, and it adds considerable latency in the range of 200 ms. SDI is the broadcast industry standard for video, but it requires dedicated and expensive cabling for each video source, including expensive transceivers. The NDI protocol (Network Device Interface) can use existing Ethernet networks and Wi-Fi to transmit video and audio signals. Adoption of NDI-enabled devices was slow due to copyright and licensing issues, but NDI is expected to become a future market leader thanks to its high performance and low latency.
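To see why a network video protocol has to compress the signal at all, a quick back-of-the-envelope calculation helps. The Python sketch below computes the uncompressed bitrate of a 1080p30 signal, assuming 8-bit 4:2:2 sampling (16 bits per pixel on average). The helper name `raw_bitrate_mbps` is purely illustrative and not part of any NDI tool.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed video bitrate in megabits per second (1 Mbit = 10^6 bits)."""
    return width * height * fps * bits_per_pixel / 1_000_000


# Assumption: 8-bit 4:2:2 sampling, i.e. 8 bits luma + on average 8 bits chroma per pixel.
rate = raw_bitrate_mbps(1920, 1080, 30, 16)
print(f"1080p30, 8-bit 4:2:2 uncompressed: {rate:.0f} Mbit/s")
```

At roughly 995 Mbit/s, a single uncompressed 1080p30 stream would already fill a gigabit Ethernet link, which is why NDI compresses the video before sending it over Ethernet or Wi-Fi.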

3. Is there any low-cost but high-quality NDI solution?

NDI-capable video cameras with PTZ (pan/tilt/zoom) movement are expensive, in the range of 1,000-20,000€. But there are NDI encoders for existing hardware like DSLRs or mobile phones. These encoders are sometimes difficult to find, so I list below what I have used so far. Once a signal is live, it can easily be displayed and organized in Open Broadcaster Software (OBS, with an NDI plugin) running on macOS, Linux or Windows. There are even apps for iOS and Android that can send and receive NDI data, replacing what once took a whole broadcast van ;-)

4. What do I need to buy?

Assuming that you already have some camera equipment that can produce a clean HDMI output (Android phones need a USB-to-HDMI adapter and iPhones a Lightning-to-HDMI adapter), you will need:

- a few cheap CAT6 cables of different lengths (superfluous if you just want a Wi-Fi solution)

- an industrial router with SIM card slots (satellite transmission is still out of reach for semi-professional use) ;-(

- one or more HDMI-to-NDI transceivers

- optionally a PTZ camera, which is not required at the beginning

5. Which devices did you test and which do you currently use?

- router: I tested an AVM FritzBox, TP-Link and various Netgear devices, all without RJ45 network ports. I now use a Teltonika RUT950 with three RJ45 ports, as it has great connectivity and a very detailed configuration menu.

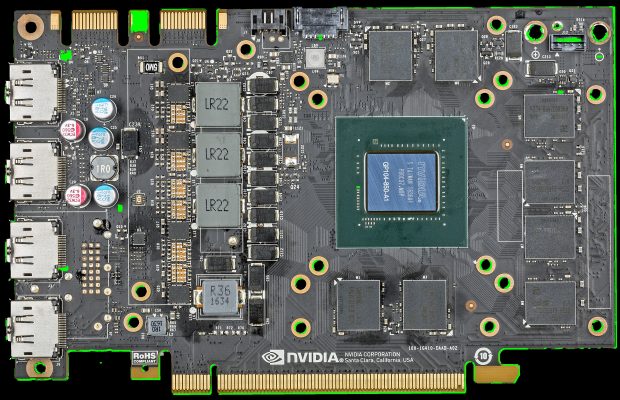

- NDI transceiver: I tried a DIY solution with FFmpeg on Ubuntu, then a Kiloview P2 and a LinkPi ENC2. I now use the Zowietek 4K HDMI encoder, which gives a stable signal, is fully configurable and silent, and can be powered via USB or PoE.

- PTZ: so far I have used a Logitech PTZ Pro 2, but there is now also an OBSBOT Tail Air in the same ballpark.

The Teltonika router and two Zowietek converters together cost less than 500€, and the OBSBOT also comes in at less than 500€, yet this setup allows for semi-professional-grade live streams.

6. Tell me a bit more about your DSLR cameras and the iPhones, please.

Nothing special: some old Nikon D4s, a Z6 and a Z8 (the latter two are mirrorless), all with good glass, and an outdated iPhone 12 mini without a SIM card.

7. Have you ever tried live streaming directly from a camera like the Insta360 X3?

No.

8. What computer hardware and software do you use for streaming and does this integrate PTZ control?

I now use a 2017 MacBook (which showed some advantages over a Linux notebook). NDI video has to be delayed to match the latency of the other remote NDI sources. I usually sync the direct sound with the NDI video by delaying the sound by +450 ms in OBS (Sync Offset in the Advanced Audio Properties).
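The idea behind these delays is to hold every faster source back until it lines up with the slowest one. Below is a minimal Python sketch of that arithmetic. The helper `sync_offsets` is hypothetical, and the latency values are assumptions for illustration: only the 450 ms figure comes from my setup, the second camera's 420 ms is made up.

```python
def sync_offsets(latencies_ms: dict[str, int]) -> dict[str, int]:
    """Extra delay (ms) each source needs so that all sources line up with the slowest one."""
    slowest = max(latencies_ms.values())
    return {name: slowest - latency for name, latency in latencies_ms.items()}


# Hypothetical latencies: direct sound arrives immediately, the NDI cameras later.
measured = {"direct_sound": 0, "ndi_cam_1": 450, "ndi_cam_2": 420}
offsets = sync_offsets(measured)
for name, delay in offsets.items():
    print(f"{name}: delay by {delay} ms")
```

With these numbers the direct sound is delayed by 450 ms, the slowest camera by nothing and the second camera by 30 ms. Real latencies have to be measured on site, for example by filming a clap in front of all cameras.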

Right now I am also testing an iPad app called TopDirector. The app looks promising, but I haven't tested it much in the wild yet.

PTZ control can be managed by an OBS plugin, while TopDirector has PTZ controls already built in.

9. How much setup time do you need?

Setting up two DSLR cameras and one PTZ camera on tripods, including cabling, takes 30 to 90 minutes. OBS configuration and YouTube setup take another 15 minutes if everything goes well.

CC-BY-NC Science Surf

accessed 16.02.2026