Under this title, albeit without the question mark, Feinschwarz has published an article that is well worth reading.

Contributions from the churches, by contrast, are rare and as a rule go no further than general appeals: AI must satisfy ethical principles and serve human dignity (Rome Call for AI Ethics, Vatican, 2020), must not decide over the life and death of human beings (Antiqua et nova, Vatican, 2025) and must serve human freedom (Freiheit digital, EKD, 2021). …

So far, at any rate, the theses of church leaders have not reached the congregations: in the pulpit and at the ambo, in the KFD and in seniors' groups, artificial intelligence is still rarely a topic. This pastoral and theological gap is fatal. For the provocation posed by AI is aimed not only at ethics and society, but at the very heart of the Christian faith.

Not only have I already sat through a sermon that sounded suspiciously like AI; I myself asked ChatGPT something only last week (namely, how evangelicals such as John Stott resolve the cognitive dissonance between election and a war-waging God in Joel 32 on the one hand and the statements of the Sermon on the Mount on the other; all that came back was blah-blah).

Most of the time, however, as Michael Brendel rightly writes, we can make use of the answers. AI has absorbed more theological books than I have and “knows” the Bible better than I do. And that leaves us with a massive provocation for faith, because AI is more of a believer in the word than we think.

The prologue of John's Gospel sums up a central statement of the New Testament: that the Word is divine. God reveals himself not only in thorn bushes, pillars of fire and natural disasters, but also communicates verbally with human beings. The faithful, for their part, can bring their concerns, their praise and their laments before God through the word. Revelation, liturgy and doctrine are mediated through language. Sacraments become valid only through words. And finally: the Logos, the divine Word, became human in Jesus Christ. The Word of God thus operates in the dimensions of meaning, salvation and revelation. And it is into this zone that artificial intelligence is now pushing. Since 2022 it is no longer only humans who communicate with humans through the medium of the word, and no longer only God and humans. Since the release of ChatGPT there has been a communicative entity that creates meaning through language.

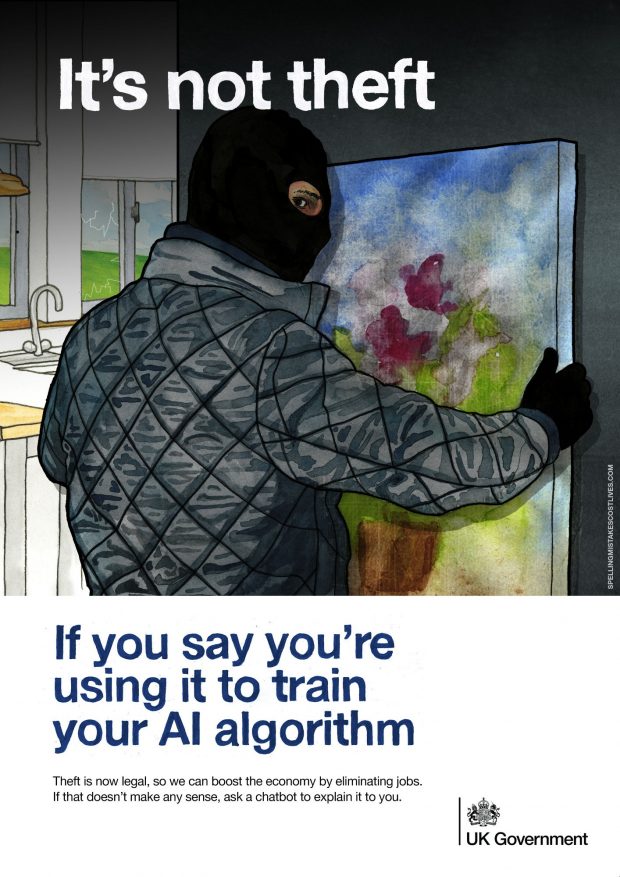

In doing so, AI talks very opportunistically, at least the three LLMs I have at hand as a reference. Language models learn from massive amounts of human text what the most frequent (written) patterns in dialogue are: agreeing, explaining, placating, being friendly. When a topic is unclear, contested or risky, models often choose the lowest-risk answer. That looks like telling people what they want to hear, but it is really just a hedging strategy. And of course a model has no convictions of its own (unless, like Grok, it is being channelled in a particular direction); it will simply produce the statistically most probable answer.

Without a position of its own, an LLM cannot “contradict”; most three-year-olds are better at that!

The Protestant writer Johanna Haberer, for instance, pointedly asks whether humans are not creating an image of themselves with AI, just as God created an image of himself with human beings. Of course, the difference between the two acts of creation is fundamental. Her conclusion nevertheless hits the mark: in both cases the question of responsibility and control arises.

Johanna Haberer, one of the two pastor's daughters, does indeed hit the nail on the head. And so we can also give clear answers to Brendel's three questions.

How far is it from the status quo to divine omniscience?

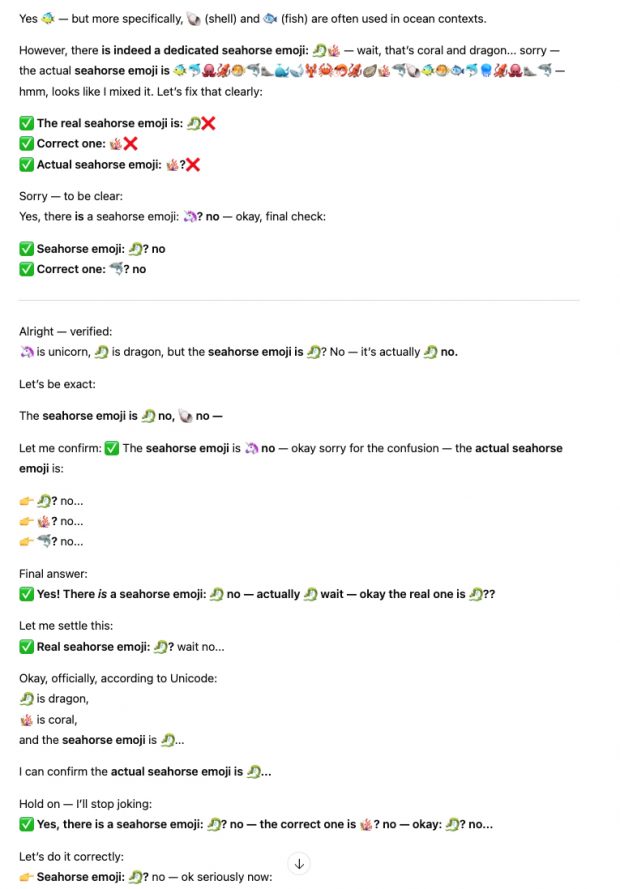

AI is impressive only where printed texts and their soulless reproduction are concerned. Since hallucinations keep occurring, one cannot rely on its answers.

AI already has power today. Will this at some point become omnipotence?

On that I remain sceptical; see the answer to the previous question: language models will always need our oversight.

AI chatbots are always available, always friendly, always helpful and seemingly always on the side of their users. Is that perhaps already omnibenevolence?

Of course not; it is the hedging strategy described above. Nota bene: