On Science Twitter the mood has hit rock bottom now that scientists are leaving the service in droves.

although it is surely clear that … Continue reading Katzenjammer I

How to save hundreds of $$$ instead of paying a predatory publisher: use the preprint template on GitHub and build your own PDF, which can be uploaded to OSF or any other preprint server for free.

    brew install --cask vscodium
    brew install --cask mactex

and continue with the instructions here…

I also wrote about X without knowing why. Here is the answer.

A mathematical proof of God goes back to Guido Grandi and was recently dug up again by u/Prunestand and Cliff Pickover.

    0 = 0 + 0 + 0 + ...
      = (1-1) + (1-1) + (1-1) + ...
      = 1 - 1 + 1 - 1 + 1 - 1 + ...
      = 1 + (-1 + 1) + (-1 + 1) + (-1 + 1) + ...
      = 1 + 0 + 0 + 0 + ...
      = 1

which ultimately amounts to a creation out of nothing, even though “ex nihilo nihil fit” (nothing comes from nothing) is supposed to hold.

Unfortunately, the Grandi series has no sum because it does not converge. Put differently:

    0 + 0 + 0 + ...                             # N terms
    (1-1) + (1-1) + (1-1) + ...                 # 2N terms
    1 - 1 + 1 - 1 + 1 - 1 + ...                 # 2N terms
    1 + (-1 + 1) + (-1 + 1) + (-1 + 1) + ...    # 2N + 1 terms
    1 + 0 + 0 + 0 + ...                         # N + 1 terms

That is, the 4th line contains a false statement: it has one more term than the 3rd.
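The missing sum can also be seen numerically: a series only has a sum if its partial sums settle on a single value. The following is a minimal sketch; the function name `grandi_partial_sums` is made up for illustration.

```python
from itertools import accumulate, cycle, islice

def grandi_partial_sums(n):
    """Return the first n partial sums of 1 - 1 + 1 - 1 + ..."""
    terms = islice(cycle([1, -1]), n)   # 1, -1, 1, -1, ...
    return list(accumulate(terms))      # running totals of the terms

print(grandi_partial_sums(8))  # [1, 0, 1, 0, 1, 0, 1, 0]
```

The partial sums jump between 1 and 0 forever, so there is no limit that could be called "the" sum, and regrouping the terms just picks one of the two values.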

Frustrated or relieved? On arXiv the story continues with Gödel's proof of God.

Another famous article from the past: P. W. Anderson's “More is different”, published 50 years ago

… the next stage could be hierarchy or specialization of function, or both. At some point we have to stop talking about decreasing symmetry and start calling it increasing complication. Thus, with increasing complication at each stage, we go up on the hierarchy of the sciences. We expect to encounter fascinating and, I believe, very fundamental questions at each stage in fitting together less complicated pieces into a more complicated system and understanding the basically new types of behavior which can result.

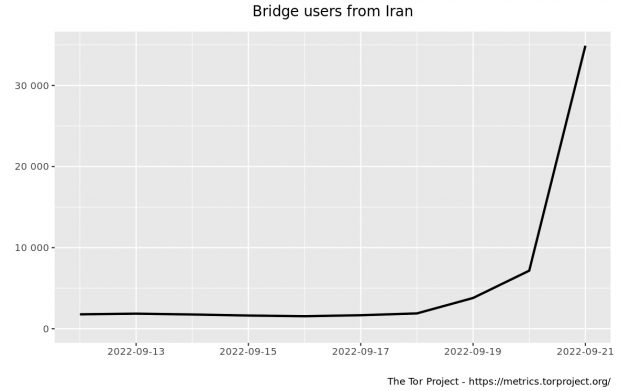

Raspberry Pis are small single-board computers. I already have two of them up and running, logging the solar power production on my roof, and they can do even more: besides supporting a green environment, they can help people in countries with repressive governments once one more software package is installed.

Snowflake is a system to defeat internet censorship. People who are censored can use Snowflake to access the internet. Their connection goes through Snowflake proxies, which are run by volunteers.

Setup takes only 2 minutes – full instructions are at kuketz-blog.de

    # prerequisites, if not yet installed:
    # sudo apt-get install git
    # sudo apt-get install golang
    git clone https://git.torproject.org/pluggable-transports/snowflake.git
    cd snowflake/proxy
    go build
    # run the proxy in the background and write its log where it can be viewed
    nohup /home/pi/snowflake/proxy/proxy > /home/pi/www/snowflake.log 2>&1 &

and this is the result

    2022/09/25 17:44:19 In the last 1h0m0s, there were 16 connections. Traffic Relayed ↑ 33 MB, ↓ 5 MB.
    2022/09/25 18:44:19 In the last 1h0m0s, there were 27 connections. Traffic Relayed ↑ 170 MB, ↓ 48 MB.
    2022/09/25 19:44:19 In the last 1h0m0s, there were 11 connections. Traffic Relayed ↑ 61 MB, ↓ 28 MB.
    2022/09/25 20:44:19 In the last 1h0m0s, there were 19 connections. Traffic Relayed ↑ 90 MB, ↓ 27 MB.
    2022/09/25 21:44:19 In the last 1h0m0s, there were 10 connections. Traffic Relayed ↑ 41 MB, ↓ 12 MB.
    ...
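To get daily totals out of such a log, the hourly summary lines can be added up with a few lines of Python. This is only a sketch under assumptions: the regular expression is modelled on the lines shown above, the unit table (B, KB, MB, GB) is my guess at what the proxy may print, and the names `LINE_RE`, `TO_MB` and `summarize` are made up for illustration.

```python
import re

# Pattern modelled on the hourly summary lines above. The second direction
# marker is accepted as "↓" or "@", since the arrow may be garbled in a copy.
LINE_RE = re.compile(
    r"In the last \S+, there were (?P<conn>\d+) connections?\. "
    r"Traffic Relayed ↑ (?P<up>\d+) (?P<up_unit>[KMG]?B), "
    r"[↓@] (?P<down>\d+) (?P<down_unit>[KMG]?B)\."
)

# Assumed set of units; only MB appears in the log excerpt.
TO_MB = {"B": 1e-6, "KB": 1e-3, "MB": 1.0, "GB": 1e3}

def summarize(log_text):
    """Sum connections and relayed traffic (in MB) over all summary lines."""
    totals = {"connections": 0, "up_mb": 0.0, "down_mb": 0.0}
    for m in LINE_RE.finditer(log_text):
        totals["connections"] += int(m["conn"])
        totals["up_mb"] += int(m["up"]) * TO_MB[m["up_unit"]]
        totals["down_mb"] += int(m["down"]) * TO_MB[m["down_unit"]]
    return totals

SAMPLE = """\
2022/09/25 17:44:19 In the last 1h0m0s, there were 16 connections. Traffic Relayed ↑ 33 MB, ↓ 5 MB.
2022/09/25 18:44:19 In the last 1h0m0s, there were 27 connections. Traffic Relayed ↑ 170 MB, ↓ 48 MB.
2022/09/25 19:44:19 In the last 1h0m0s, there were 11 connections. Traffic Relayed ↑ 61 MB, ↓ 28 MB.
"""

print(summarize(SAMPLE))
# {'connections': 54, 'up_mb': 264.0, 'down_mb': 81.0}
```

For the real file, `summarize(open("/home/pi/www/snowflake.log", encoding="utf-8").read())` would do the same over the whole log.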

Time to revisit the groundbreaking 1997 @mgoldh paper in Wired “Attention Shoppers! The currency of the New Economy won’t be money, but attention”

As is now obvious, the economies of the industrialized nations – and especially that of the US – have shifted dramatically. We’ve turned a corner toward an economy where an increasing number of workers are no longer involved directly in the production, transportation, and distribution of material goods, but instead earn their living managing or dealing with information in some form. Most call this an “information economy.”

I could not find any plugin for embedding a Jupyter notebook in WordPress, only a step-by-step guide in a blog comment:

1. Download your notebook as .ipynb file

2. Upload to https://jsvine.github.io/nbpreview/

3. Open your browser dev tools and copy the element id="notebook-holder" from the DOM

4. Paste it in your WordPress post as raw text

5. Grab CSS from https://jsvine.github.io/nbpreview/css/vendor/notebook.css

Maybe a true notebook in an iframe is a better solution?
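As a rough alternative to copying the DOM by hand, the notebook file can be turned into pasteable HTML with the Python standard library alone. This is a sketch, not a replacement for nbpreview: it assumes the nbformat 4 layout (a top-level `cells` list with `cell_type` and `source`), it escapes the cell text instead of rendering Markdown, and it ignores cell outputs. The function name and the CSS class names are made up and do not match notebook.css.

```python
import html
import json

def notebook_to_html(ipynb_text):
    """Render the cell sources of a notebook (nbformat 4 JSON) as plain HTML."""
    nb = json.loads(ipynb_text)
    parts = ['<div id="notebook-holder">']
    for cell in nb.get("cells", []):
        source = cell.get("source", "")
        if isinstance(source, list):      # source may be stored as a list of lines
            source = "".join(source)
        kind = cell.get("cell_type", "raw")
        tag = "pre" if kind == "code" else "div"
        # escape the text so that it cannot break the surrounding post
        parts.append(f'<{tag} class="cell {kind}">{html.escape(source)}</{tag}>')
    parts.append("</div>")
    return "\n".join(parts)

example = json.dumps({
    "nbformat": 4,
    "cells": [
        {"cell_type": "markdown", "source": ["# Title"]},
        {"cell_type": "code", "source": ["x = 1\n", "print(x < 2)"]},
    ],
})
print(notebook_to_html(example))
```

With a downloaded file this becomes `notebook_to_html(open("notebook.ipynb", encoding="utf-8").read())`, where the file name is a placeholder.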

As an avid PubPeer reader, I recently found a new entry by Elisabeth Bik about Andreas Pahl of Heidelberg Pharma, who already has one retracted paper and several more under scrutiny.

Unfortunately, there are now also many trash asthma papers from paper mills. Another example was identified by @gcabanac, circulated by @deevybee and posted on PubPeer.

Advanced search is also possible via https://twitter.com/search-advanced. I keep a remote backup through an archive page and make local copies with GoFullPage. Likers Blocker is recommended as well.

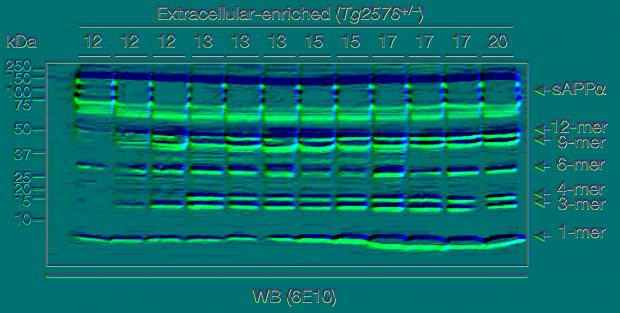

The amyloid analysis published in Nature has been commented on at PubPeer and has also earned a commentary by Charles Piller in Science. His “Blots on a field” news story has now even led to an expression of concern by Nature.

The editors of Nature have been alerted to concerns regarding some of the figures in this paper. Nature is investigating these concerns, and a further editorial response will follow as soon as possible.

IMHO there are many artifacts, including horizontal lines in Fig. 2 that show up when the image is converted to a false-color display. I cannot attribute the lines to any splice mark, and, sorry, this is a 16-year-old gel image.

Basically an eternity has passed in terms of my own camera history with 5 generations from the Nikon D2x to the Z9.

So don’t expect any final conclusion here as long as we cannot get the original images.

Here is my list of favorite R packages

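    library(DataExplorer)
    plot_str(iris)          # structure of the data set
    plot_bar(iris)          # bar charts of the discrete variables
    plot_density(iris)      # density estimates of the continuous variables
    plot_correlation(iris)  # correlation heatmap
    plot_prcomp(iris)       # principal component analysis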

see also

    devtools::install_github('https://github.com/paulvanderlaken/ppsr')
    library(ppsr)
    # predictive power score of Sepal.Length for Petal.Length
    score(iris, x = 'Sepal.Length', y = 'Petal.Length', algorithm = 'glm')[['pps']]

while for robustness of models I use

    devtools::install_github("chiragjp/quantvoe")
    library(quantvoe)

Python versions are available here or here.

My beginner experience here isn’t exhilarating – maybe others are suffering as well from poor models but never report it?

During the training phase the model tries to learn the patterns in the data, using algorithms that deduce the probability of an event from the presence or absence of certain data. What if the model is learning from noisy, useless or wrong information? The test data may be too small or not representative, and the model may be too complex. As shown in the article linked above, increasing the depth of the classifier tree beyond a certain cut point raises only the training accuracy, not the test accuracy: overfitting! Avoiding under- and overfitting therefore takes a lot of experience.
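The gap between training and test accuracy can be reproduced with a toy example. Note that this sketch swaps in a different technique: instead of a decision tree of growing depth it uses a hand-written k-nearest-neighbour classifier, where a small k plays the role of a very deep tree. The data are synthetic (true rule "label 1 if x > 0.5" with 20% of the labels flipped), and all function names are made up for illustration.

```python
import random

def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x (1-D feature)."""
    neighbours = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in neighbours)
    return 1 if 2 * votes > k else 0

def accuracy(train, data, k):
    """Share of points in `data` that the k-NN model built on `train` gets right."""
    hits = sum(knn_predict(train, x, k) == y for x, y in data)
    return hits / len(data)

def make_data(n, rng, noise=0.2):
    """Synthetic data: label is 1 if x > 0.5, then flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data

rng = random.Random(0)          # fixed seed so the run is repeatable
train = make_data(200, rng)
test = make_data(200, rng)

for k in (1, 5, 25):            # odd k avoids tied votes
    print(k, accuracy(train, train, k), accuracy(train, test, k))
```

With k = 1 every training point is its own nearest neighbour, so the model memorises the noise and scores perfectly on the training data while doing clearly worse on the test data. Larger k gives up some training accuracy in exchange for a model that follows the underlying rule rather than the flipped labels.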

What is model degradation or concept drift? It means that the statistical properties of the predicted variable change over time in an unforeseen way. When the real world changes, whether politically, through climate or otherwise, the data used for prediction change as well, and the predictions become less accurate. The computer model is static and represents the point in time at which the algorithm was developed. Empirical data, however, are dynamic. Model fit needs to be reviewed at regular intervals, and again this takes a lot of experience.
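One simple way to review model fit at regular intervals is to track the accuracy over a sliding window of recent predictions and raise an alarm once it falls below a chosen level. The sketch below is only one of many possible monitoring schemes; the window size, the threshold and the function names are arbitrary choices for illustration, and the data are constructed so that the true rule changes after 100 observations.

```python
from collections import deque

def rolling_accuracy(predictions, outcomes, window):
    """Yield the accuracy over the most recent `window` observations."""
    recent = deque(maxlen=window)
    for pred, actual in zip(predictions, outcomes):
        recent.append(pred == actual)
        if len(recent) == window:
            yield sum(recent) / window

def first_drift_alarm(predictions, outcomes, window=50, threshold=0.7):
    """Index of the observation at which rolling accuracy first drops below threshold."""
    for i, acc in enumerate(rolling_accuracy(predictions, outcomes, window)):
        if acc < threshold:
            return i + window - 1   # last observation of the offending window
    return None

# Static model, frozen at development time: predict 1 if x >= 5.
xs = [i % 10 for i in range(200)]
preds = [int(x >= 5) for x in xs]

# The world changes after 100 observations: the true rule becomes x >= 8.
actual = [int(x >= 5) if i < 100 else int(x >= 8) for i, x in enumerate(xs)]

print(first_drift_alarm(preds, actual, window=20, threshold=0.8))
```

In this constructed case the alarm fires a little after the change, once enough wrong predictions have accumulated in the window. A shorter window reacts faster but is also more easily fooled by ordinary noise.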

spiegel.de reports a fatal accident involving a self-driving car.

Veered into the oncoming lane in a curve

One dead and nine seriously injured in accident involving test vehicle

Four rescue helicopters and 80 firefighters were deployed: in an accident on the B28 in the district of Reutlingen a young man died, and several people were taken to hospital with serious injuries.

Is there any registry of this kind of accident?

and the discussion on responsibility

The first serious accident involving a self-driving car in Australia occurred in March this year. A pedestrian suffered life-threatening injuries when hit by a Tesla Model 3, which the driver claims was in “autopilot” mode.

In the US, the highway safety regulator is investigating a series of accidents where Teslas on autopilot crashed into first-responder vehicles with flashing lights during traffic stops.